Were the Debates Scripted?

Using language models to assess the predictability of Harris, Trump, Vance, and Walz's debate remarks.

The election is ten days away. While it may feel like we know everything there is to know about each candidate, really all we know are two versions of each candidate: the carefully rehearsed image crafted by their campaign, and the demonic counterpart imagined by their opposition. For their most genuine appearance we may have to look back a few weeks (or months) at a moment when they were thrust into the spotlight and forced to deal with tough questions in real-time: the presidential and vice-presidential debates.

Still, political debates are tightly controlled affairs. The campaigns negotiate hard with prospective television hosts to secure the most predictable conditions for their race horse and spend weeks grooming their star for the big moment. The end result is that scripted talking points and rehearsed answers are notoriously par for the course. But every so often, a candidate throws a curveball—a line or a word that surprises the room, perhaps even the nation, for a split second. This is why we watch debates: these moments reveal something truer about the candidates than any stump speech would allow.

Where one hears surprise, one might also hear missed prediction. Nowadays, we quantify predictions for just about anything, and, unless you’ve been living under a rock, you know we’ve been doing so for language: that’s how tools like Claude and ChatGPT work. In this post, we’ll explore whether it’s possible to zero in on these moments of surprise using language prediction engines like these.

But before we dive in, let’s examine whether this is even worth doing in the first place.

Do missed predictions really tell us anything?

Some of the most intriguing news stories capture moments where predictions missed the mark: the underdog overtakes the favorite, an economic crash defies forecasts, or a criminal is exonerated based on new evidence decades later.

But some have criticized the tendency of journalism to over-index on such surprises. For instance, Nassim Nicholas Taleb has said: “The more time you spend consuming news, the more you believe in the narrative fallacy—the need to fit explanations to every piece of randomness, every outlier event.” In other words, not every surprise is worth learning from.

At its worst, the news industry does indeed notoriously overreact to insignificant events. But at its best, it highlights where systems have failed, and it prevents us from growing complacent with them. By challenging the accepted wisdom of the status quo, good reporting forces us to learn new paths forward. “Journalism is the world’s greatest continuing education program,” writes Scott Pelley. This applies both to individuals and to society at large.

Perhaps journalism draws its power from focusing on the stories our common sense most struggled to accurately predict. In machine learning, there is a name for this type of information retrieval mechanism: active learning1.

Science Interlude: Some useful sampling mechanisms to know about

Active learning is focused on identifying the best data points for a model to learn from next. Two common techniques are uncertainty sampling and diversity sampling.

Uncertainty sampling zeroes in on data points the model is least certain about, aiming to reduce the model’s error on hard-to-predict cases. One such method is entropy sampling:

Uncertainty about what label to assign to a data point x can be measured as entropy H(x), which is highest when all outcomes are equally probable. In other words, it’s maximized if your model thinks the probability your data point x corresponds to a label yi is exactly the same for all labels (e.g. for all possible values of your label index i — from 1 to k if you have k labels).

One way of looking at it: if you have a picture that you are 100 percent sure is a dog (p(ydog|x) = 1), then your log-probability is log(100%) = 0. Meanwhile, the other possibilities you were considering must all be 0 percent probable (for instance, p(ycat|x) = 0). So your total entropy will be 0. But if you’re only 50 percent sure your picture is a dog (p(ydog|x) = 0.5), then your entropy is at least -0.5 * log(0.5), which is 0.15 — so more uncertainty will mean higher entropy.

Diversity sampling collects a broad set of examples, ensuring the model isn’t overfitting to a narrow view. It seeks to pick a set of data points 𝒮 that are maximally different from one another:

Here, 𝒟 is your “domain,” the world from which all of your data points are being sampled, and n is the desired size of your sample. d is a function that defines how different two data points xi and xj are from each other. (That big Σ just means we are summing over all possible pairs of sampled points, and the

argmaxmeans we are picking the sample that maximizes this sum)

If you were to apply this to picking three maximally different U.S. cities and decided to use distance as a measure of how different U.S. cities were, your score would be maximized by picking three cities that were very far from each other: say Seattle, Boston, and Houston.

In both of these dynamics, the goal is to find data points that your current model is likely to be incorrect about so that it may learn more quickly.

Likewise, journalism known to focus on both unexpected and underrepresented stories: scoops and front-page news on one hand, investigations into overlooked social problems on the other. Much like active learning this fulfills a dual role of exploring the unknown and painting a complete picture of reality. Thereby it contributes priceless value to our information ecosystem.

So yes, spotlighting surprises may come at risk of reading tea leaves at times. But it's also key to what makes journalism so effective: presenting information we would do well to learn from.

Now, is it worthwhile to do the same — highlighting surprise — when analyzing a political speech or debate? Specifically, can we identify statements worthy of more careful consideration just by observing the most unexpected utterances? Prediction markets have attempted to play with this idea, inviting users to predict whether a candidate will say a certain word or phrase during key events — on Manifold Markets, there are betting markets focused on Kamala-isms, Trump-isms, and even Walz-isms. (Vance-isms are a glaring omission, though his verbal ticks have not gone unnoticed by other sources.)

But what if we take this a step further and use language models for the task?

Language models are, of course, imperfect mirrors of our collective thought—they carry the biases of their training data. But as amalgamations of massive human inputs, they’re gradually becoming proxies for what “the average person might think.” Consider how Dan Hendrycks used ChatGPT to illustrate bias in the wording of a major public opinion poll about California Senate Bill SB-1047 (whether to regulate frontier A.I.). This kind of analysis is a glimpse into how large lanuguage models, also known as LLMs, can reveal expectations—and deviations from those expectations—encoded in language.

Aligned Forecast™2: as these models advance, we’ll see AI tools used in courtrooms as judges, or even as lawyers and prosecutors. Our bets are in on Manifold Markets at a 40 percent chance and 60 percent chance respectively by 2030. Please align this forecast by trading with or against us!

This suggests a particularly useful feature of LLMs: their ability to represent quantified expectations around language. But unfortunately the informativeness of those expectations can expire, as all of these models are trained only on information available up to a certain point in time, known as a knowledge cutoff. It might not tell us much whether ChatGPT was surprised by what Kamala Harris said in the debate, as ChatGPT might not even know that she is the presidential candidate. So how do we keep these models up to date?

Open-Source LLM Revolution

Thanks to the open-source boom in LLMs, we now have access to not only high-quality language models but also the tools to further train them on specific data. Imagine, for instance, fine-tuning models to capture the nuances of Kamala-isms, Walz-isms, Trump-isms, and Vance-isms. By comparing a model’s predictions before and after this fine-tuning, we can gain insight into how it adapted and, more importantly, identify truly the most surprising statements for each speaker.

This allows us to dive deeper than surface-level impressions. Instead of just noting who said what, we can look at who said what unexpectedly: when the candidates broke character, or even revealed something they didn’t mean to.

Science Interlude #2: How LLM fine-tuning works

For the more technically inclined, here’s a quick dive into the tech powering this approach:

Matrices: At their simplest, you might have heard, LLMs are just a large computational graph (an ordered sequence of operations) made up of matrices, which are themselves just large arrays of numbers. These numbers are the foundation for how models process language.

There are three main types of matrix in an LLM: embedding matrices, attention matrices, and feature matrices.Embedding matrices are the simplest type. They’re a lookup table representing every common symbol, word, or phrase a text might include. Each is mapped to a learned list of numbers meant to represent what it’s likely to mean3. When given a piece of text that’s, say, 1000 of these segments long, these matrices are used to translate the text into a stack of 1000 vectors4. The maximum number of such vectors your model will accept is known as its “context window.”

Attention matrices represent connections between these N segments. For each segment, how much do the previous segments affect their meaning? Simplifying things a great deal (and without going into how the content of these matrices are computed and learned), the entry in, say, the fifth row and second column might tell you how much that the meaning of the fifth word depends on the meaning of the second word. In other words, the fifth row tells you how important each word in the sentence is to the fifth word. “She threw a perfect pitch, he has perfect pitch, they gave a perfect pitch.” In this case, whether “pitch” was preceded by “threw,” “has,” or “give” strongly determines what it might mean.

In each “layer” of the LLM, several of these matrices are stored alongside each other so as to learn all the separate ways in which words might connect to one another (subject-verb, possession, disambiguation, causality, long-range dependencies).Feature matrices are more nebulous and difficult to interpret. These contain all the information required for the LLM to adequately represent what these connections might mean. Each “layer” of the language model contains both feature and attention matrices. These layers follow one another in a pipeline of operations so that simpler types of connections can be used to inform more difficult ones. Thus, concepts can be built up in gradual complexity, with each subsequent operation providing the model with new mechanisms for interpreting the meaning of the sentence.

Transformers: The backbone of modern LLMs, these are what the T in GPT stands for. Transformers are attention-based architectures that allow models to selectively “focus” on relevant parts of input. The aforementioned attention matrices are the cornerstone of this dynamic, and are the key innovation that was introduced by transformer architectures. By learning attention scores between words, transformers can capture relationships and context, making them powerful for processing sequential data such as sentences.

Fine-Tuning with Low-Rank Adapters: Fine-tuning traditionally requires huge computational power, so a common technique is to use adapters, which efficiently update only some of the numbers in the massive graph of matrices represented by an LLM. Often, these will be close to the last layer of the model, to have more direct influence on how the final output is computed.

Another option entirely is to simply keep the numbers in the LLM fixed and inject a new set of matrices at strategic points in the model, say, adjacent to each existing matrix. This novel approach is what’s known as Low-Rank Adaptation (LoRA). Since you’re doing this to make fine-tuning faster, you want these matrices to be as small as possible, in other words low-rank.

But since you want the inputs and outputs to match up with whatever matrix you’re inserting it next to in the network (a matrix whose input and output vectors, say, both have dimension 500), you’ll need two matrices: one to bring these down to a small size, say 5 (a 500⨉5 matrix), and another to bring these back up to the matching size (a 5⨉500 matrix). Note that these two matrices would be much smaller than a massive 500⨉500 matrix. That’s pretty much it, now you know how Low-Rank Adapters (LoRA) work!

Log-Probabilities and Perplexity: These are metrics for assessing language models. Remember the entropy calculation in our first science interlude? Log-probabilities measure the logarithm of what likelihoods the model is assigning to words in a given context — in other words the log(yi|x) terms that are spit out by this long chain of matrix operations. Meanwhile perplexity quantifies a model’s overall uncertainty.

As you can see, perplexity simply takes the average of all your model’s output log-probabilities across the words in the text you are evaluating it in, then exponentiates it (e.g. raises two to the power of this average log-probability). It’s interpreted to mean the average level of surprise a model encountered when reading a text: lower perplexity suggests a model that’s well-trained for its data, making it an important indicator of predictive accuracy.

Methodology

The technical insight behind this experiment is that by fine-tuning models on candidate-specific language patterns — all the Kamala-isms, Walz-isms, Trump-isms, and Vance-isms, as well as all of their pet issues and viewpoints — the way the model’s log-probabilities shift before and after fine-tuning will tell us something about where they deviated most from their past selves. The result: a calibrated sense of which statements the model deems “normal” for each speaker and which ones stand out as unexpected or surprising.

Training Data

The training set comprised of data from various sources, focusing on transcripts, speeches, and public statements for each candidate. For Vice President Kamala Harris, that included a Kaggle transcript of her 2020 debate with Mike Pence, a CBS interview from 2023, a CNN interview from August, an NYT transcript of her convention speech, and a Rev transcript of a campaign event the week before the debate. The data was sourced before the debate (and before her recent media blitz), so it was a little thinner.

For Donald Trump, it involved the CNN transcript of his June debate with Joe Biden, and the 13 most recent Trump rally transcripts on Rev (including rallies in Florida, Michigan, and Arizona) at the time of the debate. Unsurprisingly, there was an abundance of available remarks to train on for him.

For J.D. Vance, good data was harder to find, but there was a C-SPAN transcript of his 2022 Senate debate with Tim Ryan, a transcript of a recent religious event he spoke at, and an NYT transcript of his convention speech.

For Tim Walz, there was a C-SPAN transcript of his gubernatorial debate with Scott Jensen, a Time transcript of his convention speech, and a White House transcript of a recent campaign event.

Test Data

The test sets used for evaluating progress should not be all that surprising: they were the ABC News transcript of the Trump-Harris debate and the CBS transcript of the Vance-Walz Debate. The fine-tuned model checkpoints that performed best on this test data were selected for the analysis that follows.

Model

The model used was the base version of Meta Llama 3.1, an eight-billion parameter LLM that’s about the largest size a single Tesla A100 GPU can fine-tune using LoRA. This was partly to keep costs down — Google Colab Pro+ comes with roughly 150 hours/month of A100 GPU time, which at only $50/month is probably the best deal for compute out there.

So let’s pinpoint the most surprising utterances (specifically, only the actual content of what they said) for each candidate—moments where they most diverged from their usual language patterns, revealing rhetorical shifts, tensions, or unexpected agreement.

J.D. Vance

"Me too, man."

Context: After Walz had just said, “Well, I've enjoyed tonight's debate, and I think there was a lot of commonality here. And I'm sympathetic to misspeaking on things.”

Analysis: This capped the night’s surprisingly cordial tone, and left viewers with a lasting impression of the sense of unexpected agreement that eventually defined coverage and analysis of the debate.

"Margaret, my point is that we already have massive child separations thanks to Kamala Harris' open border.

Context: Vance was responding to a question by Margaret Brennan on family separation at the border: “Governor, your time is up. Senator, the question was, will you separate parents from their children, even if their kids are U.S. citizens?”

Analysis: Vance pivots on an issue he is usually strong on — the border — implicating Harris instead of addressing the original question. This was a particularly telling deflection, as he never went on to rule such a policy out.

“And then you've simultaneously got to defend Kamala Harris's atrocious economic record, which has made gas, groceries, and housing unaffordable for American citizens.”

Context: Vance is shifting gears to attack after a set of agreeable statements from Walz on the economy: “Much of what the senator said right there, I’m in agreement with him on this,” said Walz in his previous response, which focused on bringing jobs back into the United States.

After a few exchanged barbs, Vance seems to respond in a friendly tone — “Tim, I think you got a tough job here because you’ve got to play whack-a-mole,” but moves on to criticize Harris on the economy in the above quote.

Analysis: Vance is performing a rhetorical trick in this response that may have tripped up the LLM. By expressing pity for Walz, Vance manages to appear friendly toward him, while undermining his adversary’s running mate.

These statements and others reveal Vance’s debate strategy: alternating between sharp criticism and moments of cordiality. This enabled him to deflect aggressively with attacks against Harris where needed, while maintaining a veneer friendliness toward his opponent to keep him off balance. By accounts of the post-debate polls, it looks like it worked.

Tim Walz

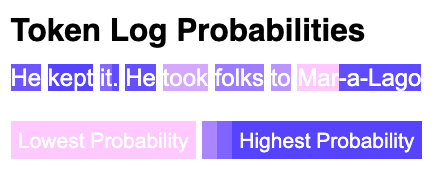

"He kept it. He took folks to Mar-a-Lago."

Context: After defending Harris’ economic plan, Walz mentioned a promise Donald Trump has made (though doesn’t clarify what the promise was). After giving him credit for keeping this promise with the statement above, he continues onto reveal what the promise was and who it was to: tax cuts to the wealthy members of Trump’s Florida club.

Analysis: The mention of the private club is unexpected for a candidate like Walz in the middle of a policy discussion, and perhaps serves as a way to draw attention to the unorthodoxy of how Trump allegedly sources his policy ideas.

"Their Project 2025 is going to have a registry of pregnancies."

Context: Walz raised this claim while discussing women and girls who have been adversely by abortion bans.

Analysis: Walz uses striking verbiage that could be seen as riskier language than his typical debate style. Once again he does so to draw attention to the strangeness of his opponents’ alleged policy influences. In this case, he’s playing on the same themes as his much-discussed “weird” line of attack, this time implying weirdness through an unexpected turn of phrase.

"But let's go back to this on immigration."

Context: This pivot followed an attack by Vance blaming the Biden-Harris administration’s immigration policy for the opioid crisis: “I don't want people who are struggling with addiction to be deprived of their second chance because Kamala Harris let in fentanyl into our communities at record levels,” said Vance in his previous remark.

Analysis: Walz is showing a surprising keenness to talk about immigration, probably as a way to show strength on an issue considered central to this campaign.

These moments reveal Walz’s reliance on riskier language than he typically uses to project strength and redirect attention to the strangeness of his opponents’ policy influences.

Kamala Harris

"In one state it provides prison for life."

Context: Harris was discussing the repercussions of restrictive abortion bans across various states: “And now in over 20 states there are Trump abortion bans which make it criminal for a doctor or nurse to provide health care.”

Analysis: Harris introduces a line of attack she had not previously often used about state abortion bans, centered on unusual punishment for healthcare providers, rather than repercussions for pregnant women.

"I absolutely support reinstating the protections of Roe v. Wade."

Context: Moderator Linsey Davis had just asked Kamala Harris, “would you support any restrictions on a woman's right to an abortion?”

Analysis: Harris uses an unexpected turn of phrase to respond to the question in the affirmative and frame her support for Roe v. Wade as a positive.

"Because he preferred to run on a problem instead of fixing a problem."

Context: Harris was discussing the immigration bill her administration had sought to pass: “That bill would have put more resources to allow us to prosecute transnational criminal organizations for trafficking in guns, drugs and human beings. But you know what happened to that bill? Donald Trump got on the phone, called up some folks in Congress, and said kill the bill. And you know why?”

Analysis: Harris uses striking verbiage to attempt to land a zinger and make a core narrative to her argument on immigration stick with voters: that she supported reform, and it’s her opponent who blocked it.

Harris’s most notable statements were direct and stark, clearly intended as ways to emphasize the contrast between her and her opponent. This was especially important given that the debate was not only an opportunity to land attacks against Trump, but also an opportunity for voters to get to know her better.

Donald Trump

"We have wars going on with Russia and Ukraine."

Context: Trump was discussing international conflicts and the U.S. role on the global stage: “They don't understand what happened tous as a nation. We're not a leader. We don't have any idea what's going on. We have wars going on in the Middle East. We have wars going on with Russia and Ukraine. We're going to end up in a third World War.”

Analysis: This statement is surprising because it frames the Russia-Ukraine conflict as though it directly involves the U.S., and seems to assign equal responsibility to an adversary, Russia, and an ally, Ukraine.

"Now she wants to do transgender operations on illegal aliens that are in prison.”

Context: Trump is continuing on a line of attack that Harris’ approach to law enforcement is unconventional: “She went out — she went out in Minnesota and wanted to let criminals that killed people, that burned down Minneapolis, she went out and raised money to get them out of jail. She did things that nobody would ever think of.”

Analysis: Such rhetoric might not be unexpected from Trump, but perhaps the exact phrasing here was. He combines multiple classically left-wing ideas to paint a scenario that sounds absurd. This emphasizes how misplaced he believes Kamala Harris’ priorities to be.

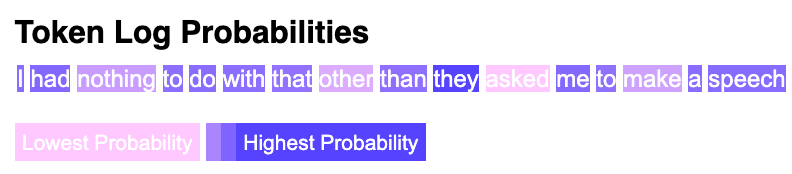

"I had nothing to do with that other than they asked me to make a speech."

Context: David Muir had just asked Trump about his involvement in the January 6th insurrection: “Is there anything you regret about what you did on that day? Yes or no.”

Analysis: Trump is on the backfoot here, deflecting responsibility rather than responding emphatically — a surprising reaction from a typically defiant candidate.

Trump’s most surprising moments were indicative of his unconventional worldview, and of a debate performance marked by wayward comments that were unexpected even from a temperamental candidate like him. Indeed, it was another such moment — his comments about Haitian immigrants eating pets — that received the most press coverage in the aftermath of the debate.

Conclusion

Analyzing debates with fine-tuned language models reveals uncharacteristic moments that went unnoticed by the press coverage after the debate. By focusing on surprising utterances, we gained insights into when the candidates deviated from their typical speech patterns, spontaneous moments that often revealed a lot about their strategy going into the debate. This method show promise in providing a new lens to interpret political discourse.

These missed LLM predictions often matched the narratives that commenters ascribed to the debates in their aftermath, showing that these weren't just anomalies, but valuable signals of a candidate’s thinking, adaptation, and performance under pressure.

It’s also worth noting that this methodology is no different from how active learning samples new data points, and the debate snippets we examined are the very training examples that would have been surfaced next in an active learning pipeline. Once again, the unexpected holds the key to learning something new.

This post demonstrates how LLMs can offer a bridge from the quantitative to the qualitative, allowing us to ascribe objective predictive methodologies even to subjective phenomena like political language and speech. That’s very much in line with what this newsletter will continue to explore in future: ways that predictive technologies can serve democracy and politics in new ways, so please subscribe if that’s something that sounds interesting to you!

Last but not least, here is the link to the analysis in Colab — comments are enabled, so please add thoughts and questions if you have any.

The approach definitely has room for improvement: the training data was uneven between candidates due to limited availability, the model used was small due to computational constraints, and the metrics were simplistic for something as nuanced as political debate. In future, it might be worth exploring advanced model interpretability techniques such as sparse cross-coders, which make it possible to extract the meaning of all the learned representations in a fine-tuned model, as compared to a base model.

If you have any other ideas for improvement, please share them in a comment, and, as always, thanks for reading!

Some might also compare journalism to anomaly detection, a similar sampling strategy, but one that doesn’t require a baseline model. This probably makes it less apt as an analogy as journalism does not operate in a vacuum.

These forecasts will be peppered into future posts and pegged to actual betting market positions — as a way of holding the authors accountable to their predictions.

Think of a giant Excel spreadsheet with a row for every word in the English dictionary.

A stack of vectors: in other words, another matrix. Every layer of a deep learning model is a series of matrix operations, for example multiplying two matrices, which then output a new matrix. This matrix is then fed into the next layer.